What is the ReLU activation function?

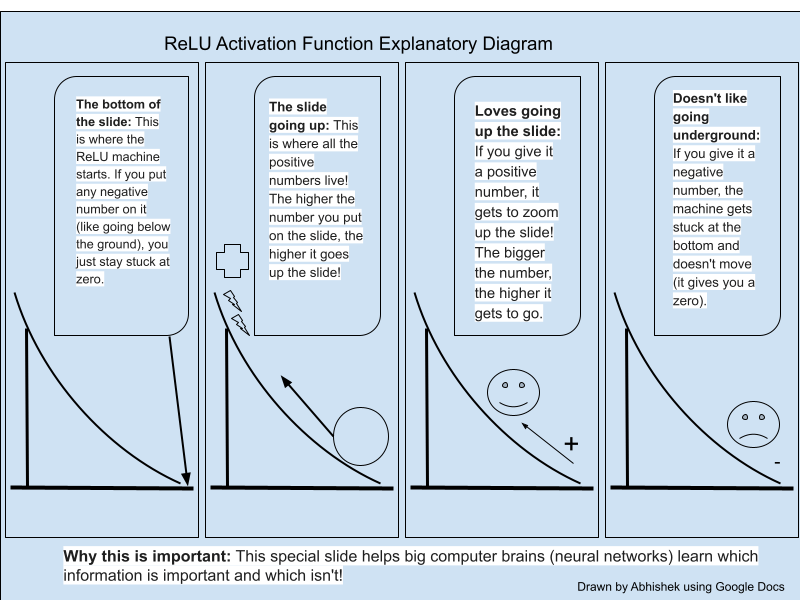

Imagine you have a machine that loves positive numbers. Let's call this machine the ReLU machine.

Happy with Positives: If you give the ReLU machine a positive number, like 5 or 10, it gets super excited and gives you the same number back! That's because it loves positive numbers.

Ignores Negatives: But if you give the ReLU machine a negative number, like -2 or -7, it gets a bit grumpy and just returns a 0. It doesn't like negative numbers at all!

Why does this machine matter?

The ReLU machine is kind of like a special helper inside a big brain made of computer code (that's what a neural network is!). This special helper makes the brain learn better and faster. It's like the machine helps the brain decide which information is important and which isn't!

The rectified linear unit (ReLU) is one of the most widely used activation functions in artificial neural networks. It is a piecewise linear function that introduces non-linearity into the network, which is crucial for the network to learn complex relationships in the data.

Here's how it works:

For any positive input value, the ReLU function simply outputs the same value.

For zero or any negative input value, the ReLU function outputs zero.

Mathematically, the ReLU function can be represented as:

f(x) = max(0, x)

Here, x is the input value to the neuron.
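The formula above can be sketched in a few lines of plain Python. This is only a minimal illustration for single numbers; the function name `relu` and the sample inputs are chosen here for the example, and real deep learning libraries apply the same rule to whole arrays at once.

```python
def relu(x: float) -> float:
    """Return x for positive inputs and 0.0 otherwise, i.e. max(0, x)."""
    return max(0.0, x)


# Sample inputs (illustrative values, matching the numbers used earlier).
inputs = [-7.0, -2.0, 0.0, 5.0, 10.0]
outputs = [relu(x) for x in inputs]

print(outputs)  # [0.0, 0.0, 0.0, 5.0, 10.0]
```

Positive numbers pass through unchanged, while zero and negative numbers all come out as zero.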

Why is ReLU popular?

There are several reasons why ReLU is a popular choice for activation functions:

Computational efficiency: ReLU needs only a comparison with zero, with no exponentials or divisions as in sigmoid or tanh, making it cheap to compute when training large neural networks.

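Vanishing gradient problem: ReLU helps to mitigate the vanishing gradient problem, in which gradients shrink toward zero as they are propagated back through many layers, so the early layers of a deep network barely learn. Because the ReLU gradient is exactly 1 for any positive input, it does not shrink the signal the way saturating functions such as sigmoid or tanh do.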

Biological plausibility: ReLU has some similarities to the way biological neurons function, making it an appealing choice for some researchers.
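To make the vanishing gradient point more concrete, here is a rough sketch that compares the derivative of ReLU with the derivative of the sigmoid function and multiplies each one across several layers. It is a deliberate simplification: the layer weights are ignored, and the `depth` and the input value `x` are hypothetical numbers picked for the example.

```python
import math


def relu_grad(x: float) -> float:
    """Derivative of max(0, x): 1 for x > 0, else 0 (the value at x == 0 is a convention)."""
    return 1.0 if x > 0 else 0.0


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    """Derivative of the sigmoid; it never exceeds 0.25."""
    s = sigmoid(x)
    return s * (1.0 - s)


depth = 10  # hypothetical number of layers
x = 0.5     # hypothetical input, assumed to be the same at every layer

# Backpropagation multiplies one derivative per layer (weights ignored here).
relu_product = relu_grad(x) ** depth
sigmoid_product = sigmoid_grad(x) ** depth

print(relu_product)            # 1.0
print(sigmoid_product < 1e-6)  # True
```

With ReLU the product stays at 1 as long as the inputs are positive, while the sigmoid product has already fallen below one millionth after ten layers.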

However, ReLU also has some limitations:

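Dying ReLU problem: A ReLU neuron can become "stuck" outputting zero for every input. Because the gradient is also zero whenever the output is zero, the neuron's weights stop updating and it may never recover. This can happen if the learning rate is too high or if the network is poorly initialized (for example, with a large negative bias).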

Not zero-centered: The outputs of the ReLU function are never negative, so their mean is above zero rather than centered on it. This shifts the inputs seen by the next layer and can slow down gradient-based training in some networks.
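The dying ReLU problem can be illustrated with a single neuron. In this sketch the `weight`, `bias`, and `inputs` are made-up values, with the bias deliberately set very negative so that the neuron is "dead" for every input in the list.

```python
def relu(x: float) -> float:
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """Derivative of ReLU: 1 for x > 0, else 0."""
    return 1.0 if x > 0 else 0.0


# Hypothetical neuron whose bias has been pushed far into the negatives.
weight, bias = 0.5, -10.0
inputs = [-3.0, -1.0, 0.0, 2.0, 4.0]

pre_activations = [weight * x + bias for x in inputs]
outputs = [relu(z) for z in pre_activations]
local_grads = [relu_grad(z) for z in pre_activations]

print(all(out == 0.0 for out in outputs))    # True
print(all(g == 0.0 for g in local_grads))    # True
```

Every output is zero, and so is every local gradient. Since the update to the weight and bias is scaled by that local gradient, gradient descent has nothing to work with and the neuron stays stuck.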

Overall, ReLU is a powerful and popular activation function that has been used successfully in a wide range of deep learning applications.

Here are some additional points to consider:

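There are several variations of the ReLU function, such as the Leaky ReLU. Instead of returning zero for negative inputs, Leaky ReLU returns the input multiplied by a small slope, so the gradient is never exactly zero and "stuck" neurons still have a chance to recover.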

The choice of activation function can have a significant impact on the performance of a neural network. It is important to experiment with different activation functions to find the one that works best for your specific task.
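As a starting point for experimenting, here is a minimal sketch of Leaky ReLU in plain Python. The parameter name `negative_slope` and its value of 0.01 are assumptions for this example (0.01 is a commonly used default, but it is a tunable setting rather than a fixed part of the definition).

```python
def leaky_relu(x: float, negative_slope: float = 0.01) -> float:
    """Return x for positive inputs and negative_slope * x otherwise."""
    return x if x > 0 else negative_slope * x


# Illustrative inputs, including negatives that plain ReLU would zero out.
inputs = [-4.0, -2.0, 0.0, 5.0]
outputs = [leaky_relu(x) for x in inputs]

print(outputs)  # [-0.04, -0.02, 0.0, 5.0]
```

Negative inputs now produce small negative outputs instead of zero, which keeps a small gradient flowing through the neuron.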